By Shane He (WI Rapporteur, Nokia), Srinivas Gudumasu (InterDigital), Saba Ahsan (SA4 RTC SWG Chair)

First published June 2025, in Highlights Issue 10

Split rendering is a technique in which the processing of XR content is distributed between the user device (e.g., AR glasses) and the network (e.g., edge or cloud servers) to reduce the computational load on the device. By leveraging the network's processing capabilities, it can improve the user experience on devices with limited compute and reduce battery consumption on the end device.

In Rel-18, IMS-based AR real-time communication (TS 26.264) introduced support for split rendering. In Rel-19, SA4 has defined a generic split rendering service for XR media, covering both real-time and non-real-time XR, in TS 26.567. The new specification uses the IMS data channel to provide the split rendering service and adds new features for improved performance.

Key use cases enabled by this specification include XR services in industrial (e.g., monitoring, maintenance, collaboration, and teleoperation), enterprise, and educational environments; entertainment use cases, including cloud gaming; and shared, collaborative entertainment and productivity XR services in which users gather in a shared space and interact with each other and with the environment.

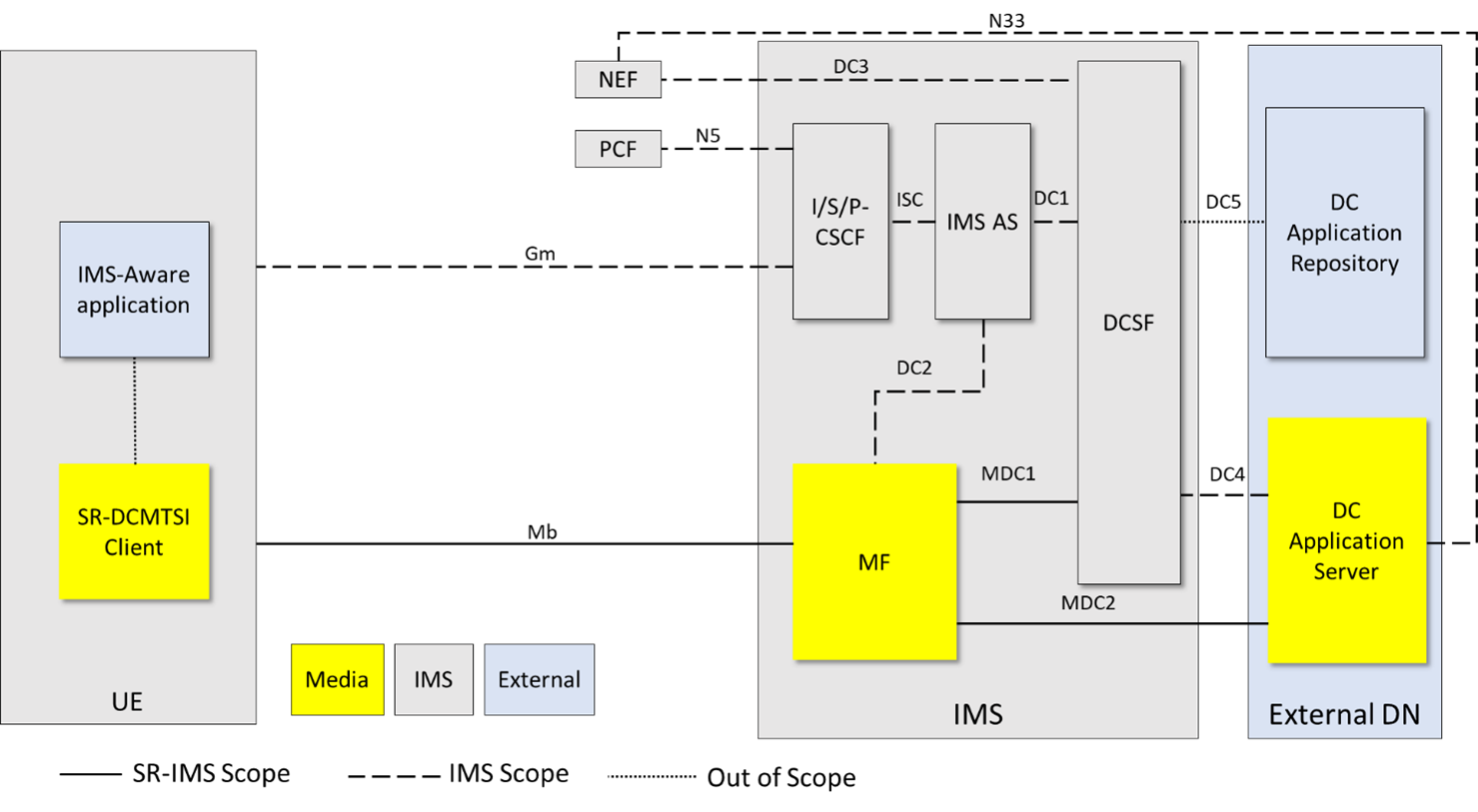

Figure 1 illustrates how the split rendering functions are mapped to a generalized IMS DC (Data Channel) architecture. A Split Rendering DCMTSI Client (SR-DCMTSI) is an MTSI client, as specified in TS 26.114, that additionally supports the IMS data channel for split rendering. The Media Function (MF) is responsible for interacting with clients during the split rendering process, managing resources, and running the rendering process.

The DC Application Server (AS) controls split rendering services, including session media control and capability negotiation. TS 26.567 specifies codecs and media transport protocols for delivering split-rendered media and its associated metadata. It also contains procedures for split rendering session establishment, modification, and adaptation. In addition, the specification lists the QoE metrics that can be collected and reported from an SR-DCMTSI client, along with the configuration information required to enable the QoE metrics collection and reporting feature.

Overall, 3GPP TS 26.567 serves as a comprehensive guide for network operators, service providers, media application providers, and equipment manufacturers to develop and deploy IMS-based multimedia telephony services for split rendering, ensuring consistent performance and interoperability across the ecosystem. It is expected to bring several significant benefits to operators and users, for example:

- Enhanced User Experience: Split rendering allows for more efficient processing and rendering of multimedia content by distributing the workload between the client and the server. This can lead to smoother and higher-quality multimedia experiences for end-users, particularly in applications like augmented reality (AR), virtual reality (VR) and extended reality (XR). Adaptation of rendering tasks between the end device and network ensures the final rendering is optimized based on network conditions and capabilities.

- Reduced Latency: By offloading some of the rendering tasks to the server, split rendering can reduce the latency experienced by users. This is crucial for real-time applications such as gaming, video conferencing, and interactive media, where low latency is essential for a seamless experience. The MF can adjust the processing delay of its split rendering tasks based on end-to-end latency metrics, providing a better quality of experience to users.

- Optimized Network Utilization: Split rendering offloads complex processing tasks to servers and reduces the amount of data that needs to be transmitted over the network. This is achieved by sending only the data necessary for rendering rather than the entire multimedia content, in particular through split adaptation. The result can be more efficient use of bandwidth and reduced network congestion.

- Device Accessibility: Operators can enable XR services on devices with lower processing power by leveraging server-side rendering, where the server renders the full XR scene. This can lower device costs for end-users and make advanced multimedia services accessible on new lightweight devices such as AR glasses.

- Interoperability and Standardization: Deploying standardized split rendering functions in existing IMS systems can improve performance, efficiency, and user satisfaction, while giving operators opportunities for innovation and differentiation in the competitive telecommunications market.
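To make the idea of split adaptation in the list above more concrete, the following is a minimal illustrative sketch of how a rendering split might be chosen from measured link conditions. It is not taken from TS 26.567: the names `SplitMode`, `LinkMetrics`, and `choose_split`, the three split levels, and the threshold values are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class SplitMode(Enum):
    """Hypothetical split levels; not defined by TS 26.567."""
    FULL_REMOTE = "full_remote"    # network renders the whole scene
    HYBRID = "hybrid"              # work shared between device and network
    MOSTLY_LOCAL = "mostly_local"  # device renders most of the scene


@dataclass
class LinkMetrics:
    e2e_latency_ms: float  # measured end-to-end latency
    bandwidth_mbps: float  # available downlink throughput


def choose_split(metrics: LinkMetrics,
                 device_can_render: bool,
                 latency_budget_ms: float = 50.0,   # assumed budget
                 min_stream_mbps: float = 20.0      # assumed stream rate
                 ) -> SplitMode:
    """Pick a split level from link metrics and device capability."""
    # A device that cannot render locally has no choice but full offload.
    if not device_can_render:
        return SplitMode.FULL_REMOTE

    over_budget = metrics.e2e_latency_ms > latency_budget_ms
    starved = metrics.bandwidth_mbps < min_stream_mbps

    # Both constraints violated: move as much work as possible to the device.
    if over_budget and starved:
        return SplitMode.MOSTLY_LOCAL
    # One constraint violated: share the work.
    if over_budget or starved:
        return SplitMode.HYBRID
    # Healthy link: offload everything to save device compute and battery.
    return SplitMode.FULL_REMOTE
```

In this sketch, a capable device on a healthy link offloads everything, while rising latency or falling bandwidth shifts work back toward the device. An actual deployment would rely on the session adaptation procedures and QoE metrics defined in the specification rather than on fixed thresholds like these.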

Figure 1: Generalized IMS DC Architecture to support split rendering

For more from WG SA4 see: www.3gpp.org/3gpp-groups
