By Imed Bouazizi and Thomas Stockhammer (Qualcomm)

First published June 2025, in Highlights Issue 10

In recent years, Augmented Reality (AR) has moved from a niche technology to a mainstream phenomenon, enhancing real-time communications (RTC) with richer and more engaging user experiences.

As AR services proliferate across various devices and form factors, integrating avatars into real-time AR calls has emerged as a critical advance. Recognizing this potential, 3GPP has recently completed the FS_AVATAR study in Release 19, laying essential groundwork for the standardized deployment of avatars within immersive AR communications.

Following this foundational study, the AvCall-MED normative work item was approved to advance avatar integration specifically within IMS-based AR calls. This targeted initiative aims to standardize the representation, animation, and signaling protocols essential for enabling both 2D and 3D avatar communications, thus ensuring broader interoperability among service providers, developers, and end users.

Foundations from FS_AVATAR Study

The FS_AVATAR study, documented in 3GPP TR 26.813, explored the technical landscape necessary for effective avatar communication. Key topics included robust avatar representation formats, efficient methods for streaming avatar animations, and the essential signaling mechanisms. The study emphasized the need for seamless compatibility with existing AR-capable devices such as smartphones, AR glasses, and head-mounted displays (HMDs).

Given the complexity of this feature, the study's conclusions recommended that Release 19 work focus initially on enabling 1-to-1 avatar communications. This approach is expected to expedite implementation and adoption, providing a foundation upon which more complex multi-party scenarios can subsequently be developed.

Avatar Representation Format (ARF)

A central element of this initiative is the introduction of the MPEG Avatar Representation Format (ARF), standardized as ISO/IEC 23090-39. ARF defines a structured and interoperable framework for avatar data representation, including geometric models, skeletal structures, blend shapes for facial expressions, textures, and animation data. This standard ensures avatars are consistently rendered and animated across diverse devices and services, enhancing user experience and service consistency.

ARF supports two container formats: ISOBMFF (ISO Base Media File Format) and ZIP-based containers, enabling efficient and partial access to avatar components.
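To illustrate what partial access to a ZIP-based container means in practice, the following minimal sketch reads a single component from an archive without extracting the others. It uses only Python's standard `zipfile` module. The component names (`mesh/body.bin`, `skeleton/joints.json`, `textures/face.png`) and the helper functions are hypothetical and chosen for illustration only; they do not reflect the actual layout defined by ISO/IEC 23090-39.

```python
import io
import zipfile


def build_demo_container() -> bytes:
    """Build an in-memory ZIP archive with hypothetical avatar components.

    The entry names are illustrative assumptions, not the ARF layout.
    """
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("mesh/body.bin", b"\x00" * 1024)
        zf.writestr("skeleton/joints.json", '{"joints": ["hips", "spine", "head"]}')
        zf.writestr("textures/face.png", b"\x89PNG-placeholder")
    return buf.getvalue()


def list_components(container: bytes) -> list[str]:
    """Return the entry names found in the archive's central directory."""
    with zipfile.ZipFile(io.BytesIO(container)) as zf:
        return zf.namelist()


def read_component(container: bytes, name: str) -> bytes:
    """Decompress and return one entry, leaving all other entries untouched."""
    with zipfile.ZipFile(io.BytesIO(container)) as zf:
        return zf.read(name)
```

A receiver that only needs the skeleton could call `read_component(container, "skeleton/joints.json")` and defer fetching the textures until they are required.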

Objectives of AvCall-MED

In alignment with FS_AVATAR findings and 3GPP WG SA2 architectural enhancements, AvCall-MED targets three main deployment scenarios for avatar communication: Receiver-driven, Sender-driven, and Network-driven avatar rendering and animation.

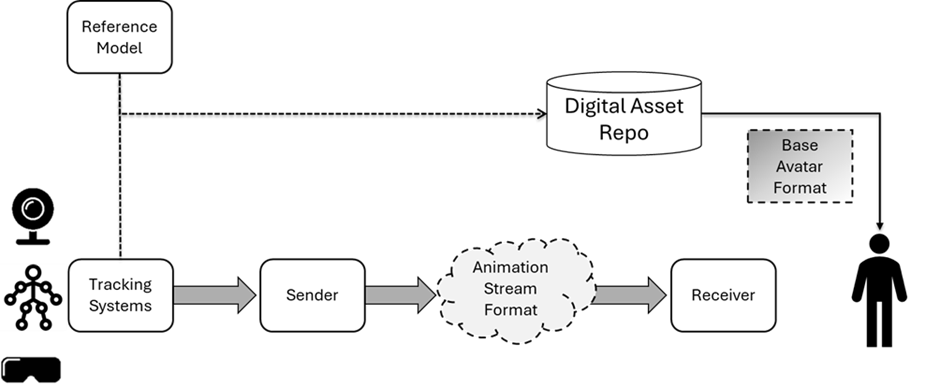

For Receiver-driven avatar communication, AvCall-MED will extend TS 26.264 by specifying the essential formats, signaling protocols, and animation streams tailored for IMS-based AR calls. The animation streams are designed to interoperate with XR runtimes, such as OpenXR [2]. This approach is depicted in the accompanying figure.

In the case of Sender-driven avatar communication, AvCall-MED will leverage the existing TS 26.114 and TS 26.264 specifications. Here, the sender device animates the avatar locally and transmits the final rendered stream. Guidelines will be established to optimize this method, including optional mechanisms for exchanging pose data. These are needed when the receiver’s viewpoint or interactions directly influence avatar rendering on the sender’s side.

For scenarios utilizing Network-driven avatar rendering, AvCall-MED will introduce additional extensions to TS 26.264. These will define the formats and signaling required for base avatars and animation streams, alongside capabilities for signaling and format negotiation to facilitate Media Function (MF)-based animation and rendering within the network. Network-driven rendering offers distinct advantages, especially for thin AR glasses or other resource-constrained devices, by shifting computational demands onto the network infrastructure.
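The three scenarios can be summarized as a small lookup table recording where the avatar is animated and rendered and which specifications the text above associates with each case. This is only an illustrative sketch: the class and field names are hypothetical, and the table restates the article's description rather than any normative definition.

```python
from dataclasses import dataclass
from enum import Enum


class RenderSite(Enum):
    """Where avatar animation and rendering take place (as described above)."""
    RECEIVER_UE = "receiver UE"
    SENDER_UE = "sender UE"
    NETWORK_MF = "network Media Function (MF)"


@dataclass(frozen=True)
class Scenario:
    name: str
    render_site: RenderSite
    specs: tuple[str, ...]  # specifications named in the article for this scenario


# Hypothetical summary table; it mirrors the prose, not a normative spec.
SCENARIOS = (
    Scenario("Receiver-driven", RenderSite.RECEIVER_UE, ("TS 26.264",)),
    Scenario("Sender-driven", RenderSite.SENDER_UE, ("TS 26.114", "TS 26.264")),
    Scenario("Network-driven", RenderSite.NETWORK_MF, ("TS 26.264",)),
)


def scenarios_without_receiver_rendering() -> list[str]:
    """Scenarios in which the receiving device does not render the avatar itself."""
    return [s.name for s in SCENARIOS if s.render_site is not RenderSite.RECEIVER_UE]
```

For a resource-constrained receiver such as thin AR glasses, `scenarios_without_receiver_rendering()` lists the options that keep avatar rendering off the receiving device.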

IMS Architecture Extensions in TS 23.228

To fully support avatar communications, extensions to the IMS architecture have been introduced by 3GPP WG SA2 in TS 23.228. These enhancements define additional signaling and session management capabilities required for handling avatar-related information flows within IMS-based services. These architectural updates ensure the seamless integration of avatar communication functionalities, allowing avatars to be efficiently exchanged, animated, and managed within existing IMS frameworks.

An important enhancement to the architecture is the Base Avatar Repository (BAR) element, which is responsible for the secure storage and management of base avatars, allowing participants in an AR call to access the sender’s base avatar for animation. AvCall-MED is considering the development of an interface to the BAR to facilitate secure management and controlled access to avatar assets. This interface will enable service providers and users to store, retrieve, and dynamically update their avatar data securely and efficiently, significantly streamlining asset management across diverse AR applications and platforms.
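Because the BAR interface is still under consideration, no API can be quoted here. The sketch below is a purely hypothetical in-memory model of the operations mentioned above (store, retrieve, update, and controlled access), intended only to make the idea concrete. All names, including `InMemoryBAR`, `grant_access`, and the versioning scheme, are assumptions and not part of any 3GPP specification.

```python
from dataclasses import dataclass, field


@dataclass
class BaseAvatarRecord:
    owner: str
    data: bytes
    version: int = 1
    readers: set = field(default_factory=set)  # users allowed to retrieve


class InMemoryBAR:
    """Hypothetical stand-in for a Base Avatar Repository (illustration only)."""

    def __init__(self) -> None:
        self._records: dict = {}

    def store(self, avatar_id: str, owner: str, data: bytes) -> None:
        if avatar_id in self._records:
            raise KeyError(f"avatar {avatar_id!r} already exists")
        self._records[avatar_id] = BaseAvatarRecord(owner=owner, data=data)

    def _owned(self, avatar_id: str, user: str) -> BaseAvatarRecord:
        # Only the owner may modify a record or its access list.
        record = self._records[avatar_id]
        if record.owner != user:
            raise PermissionError(f"{user!r} does not own {avatar_id!r}")
        return record

    def grant_access(self, avatar_id: str, owner: str, reader: str) -> None:
        self._owned(avatar_id, owner).readers.add(reader)

    def update(self, avatar_id: str, owner: str, data: bytes) -> int:
        record = self._owned(avatar_id, owner)
        record.data = data
        record.version += 1
        return record.version

    def retrieve(self, avatar_id: str, requester: str) -> tuple:
        record = self._records[avatar_id]
        if requester != record.owner and requester not in record.readers:
            raise PermissionError(f"{requester!r} may not read {avatar_id!r}")
        return record.data, record.version
```

In this model, a sender would store a base avatar, grant access to the other call participant, and that participant would then retrieve the avatar data together with its version so that a later update can be detected.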

Conclusion and Future Work

The AvCall-MED work item represents a significant advancement in 3GPP’s ongoing efforts to enrich RTC through immersive technologies. By addressing core technical requirements for avatar representation, animation, and management using the ARF format and IMS architectural enhancements, AvCall-MED establishes a robust foundation for the future proliferation of immersive communication services.

As a follow-up to this work, we anticipate studying the traffic characteristics and other aspects of more advanced avatar representation and animation. The focus will be on quality of experience, security, and UE characterization for a realistic-looking avatar communication experience.

References

- [1] 3GPP TR 26.813, “Study of Avatars in Real-Time Communication Services”.

- [2] The Khronos® OpenXR Working Group, “The OpenXR™ Specification 1.1”.

- [3] 3GPP TS 26.264, “IMS-based AR Real-Time Communication”.

- [4] 3GPP TS 23.228, “IP Multimedia Subsystem (IMS); Stage 2”.

- [5] ISO/IEC 23090-39:2025, Information technology, Avatar Representation Format.

For more from WG SA4 see: www.3gpp.org/3gpp-groups
